The Benefits of Third-Party App Integrations

Third-party app integrations have become foundational to modern software development. From streamlining workflows to accelerating feature deployment, integrations help organizations build more robust, feature-rich applications while focusing on their core value propositions.

Common Use Cases

- Authentication: Tools like Auth0 and PropelAuth simplify user sign-up/login workflows.

- Monitoring & Observability: Platforms like Datadog and New Relic provide insights into performance and uptime.

- Error Reporting: Tools such as Sentry and Bugsnag alert developers to issues as they happen.

- Sales & Marketing: CRMs like Salesforce and HubSpot help drive customer acquisition and retention.

- Web Analytics: Google Analytics, Mixpanel, and Segment offer behavioral insights.

- LLM Integrations: AI tools like OpenAI, Anthropic, Google Gemini and others are rapidly being integrated into workflows for customer support, content generation, and internal knowledge search.

Advantages

- Faster Time to Market: Teams can deliver features rapidly by avoiding the overhead of building everything in-house.

- Reduced Development Costs: Buying best-in-class functionality is often cheaper than building and maintaining it.

- Engineering Focus: Developers can focus on what differentiates their product instead of reinventing common tools.

The Dangers of Third-Party App Integrations

While third-party services unlock massive benefits, they also introduce risks, especially when privacy isn’t embedded by design.

SDKs Full of Security Risks

Most integrations rely on SDKs that introduce:

- Open-Source Vulnerabilities: Malicious or outdated dependencies.

Example: The event-stream incident, where a widely used npm package was found to include a malicious dependency targeting crypto wallets. - Scope Creep: Once an SDK is embedded, it may request or collect more data than originally anticipated. These layers of abstraction make it difficult to identify data exposure risks.

Unintentional Data Sharing Risks

Despite the benefits, third-party integrations often become privacy minefields. Developers – and increasingly, AI code assistants – can unintentionally introduce risks by oversharing sensitive data with third-party services, bypassing established data processing agreements (DPAs).

Companies Apply Rigorous Reviews During Vendor Onboarding but Often Lack Continuous Monitoring to Ensure Agreed-Upon Data Flows Are Upheld

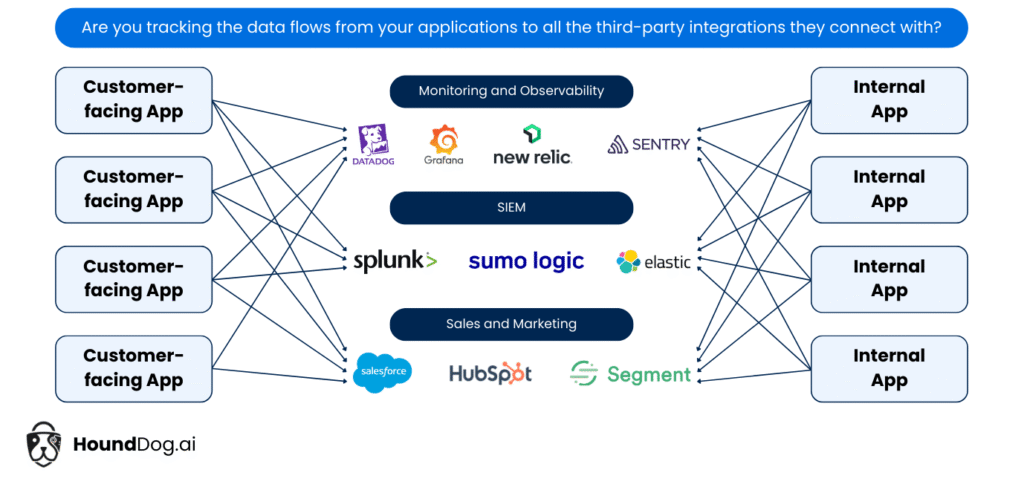

Assume that a company has developed a customer-facing application that integrates with Datadog for continuous monitoring, Google Analytics for tracking user sessions, Salesforce for updating customer data for sales and marketing purposes, and OpenAI to enable personalization. The appendix section of most Data Processing Agreements (DPAs) typically includes provisions to document the Categories of Data Subjects, Categories of Personal Information, Sensitive Data Processed, and the Nature/Purpose of Processing.

In this scenario, the agreed-upon categories of personal information allowed for each vendor are as follows:

| Platform | Categories of Personal Information Allowed in the DPA |

|---|---|

| Datadog | hostname, ipAddress, deviceType |

| OpenAI | role, industry, companySize, age, gender |

| Google Analytics | ipAddress, deviceType, browserUsed |

| Salesforce | firstName, lastName, email, phoneNumber, role, companyName, industry |

While security, privacy, and third-party risk management teams often spend significant time during the vendor onboarding process ensuring that vendors meet compliance requirements and agree to the terms of the DPA, unfortunately, many companies stop there. Once a vendor is onboarded, few controls are typically put in place to continuously monitor adherence to the agreed-upon data flows.

This is a critical gap. It’s not just the vendor’s responsibility to uphold the DPA—your own developers play a major role. If an engineer mistakenly sends unauthorized fields (such as email or SSN) to a vendor like Datadog or OpenAI, the breach originates from your side—even if the vendor’s own systems are secure and compliant.

Once that sensitive data reaches a third-party system, you’re at the mercy of their internal data handling practices. In many cases, the data becomes deeply embedded within their ecosystem—replicated across logs, caches, dashboards, backups, and internal analytics tools. Deleting or correcting that data after the fact can be operationally complex and legally uncertain.

The bottom line: Strong vendor onboarding is not enough. Organizations must adopt continuous controls to ensure data sharing practices in the code stay aligned with what was contractually agreed. Without this, the risk of data overexposure is not just theoretical—it’s inevitable.

Real-Life Examples of Sensitive Data Leaks in Third-Party Integrations

Accidental Logging or Sharing of Entire User Objects

As developers build integrations with analytics, observability, or CRM tools, it’s common to pass contextual data to these platforms for better insights. However, without clear guardrails, developers – or AI coding assistants – may accidentally transmit full user objects, leading to the exposure of personally identifiable information (PII) such as names, emails, phone numbers, and even Social Security Numbers (SSNs). This often happens when objects are spread into function parameters ({ …user }) or logged without filtering.

Below are real-world inspired examples using a shared User object that highlight how this mistake can surface across common platforms:

👤 User Object Used in All Examples

interface User {

id: string;

email: string;

ssn: string;

firstName: string;

lastName: string;

phoneNumber: string;

role: string;

companyName: string;

industry: string;

}🟠 Example 1: Datadog – Accidental Logging or Sharing of Entire User Objects

import { createLogger } from '@datadog/browser-logs';

const datadogLogger = createLogger({

clientToken: 'DATADOG_CLIENT_TOKEN',

site: 'datadoghq.com',

service: 'your-app',

env: 'production',

forwardErrorsToLogs: true,

sampleRate: 100,

});

function getSystemInfo() {

return {

deviceType: /Mobi|Android/i.test(navigator.userAgent) ? "mobile" : "desktop",

ipAddress: "fetch-dynamically-from-server",

hostname: window.location.hostname,

};

}

function handleLogin(user: User) {

// ❌ BAD

datadogLogger.info("User logged in", { user });

// ✅ GOOD

const { deviceType, ipAddress, hostname } = getSystemInfo();

datadogLogger.info("User logged in", {

deviceType,

ipAddress,

hostname,

});

}Why It’s Risky:

Datadog’s DPA allows metadata like hostname, ipAddress, and deviceType. Logging the full user object violates this agreement and may expose sensitive data into Datadog logs, which are hard to scrub post-ingestion.

🟠 Example 2: Google Analytics – Accidental Logging or Sharing of Entire User Objects

declare function gtag(event: string, action: string, params: Record<string, any>): void;

function getDeviceInfo() {

return {

deviceType: /Mobi|Android/i.test(navigator.userAgent) ? "mobile" : "desktop",

browserUsed: (() => {

const ua = navigator.userAgent;

if (ua.includes("Chrome")) return "Chrome";

if (ua.includes("Firefox")) return "Firefox";

if (ua.includes("Safari") && !ua.includes("Chrome")) return "Safari";

return "Other";

})(),

ipAddress: "fetch-from-server",

};

}

function trackUserSignup(user: User) {

// ❌ BAD

gtag("event", "user_signup", { ...user });

// ✅ GOOD

const { deviceType, browserUsed, ipAddress } = getDeviceInfo();

gtag("event", "user_signup", {

deviceType,

browserUsed,

ipAddress,

});

}Why It’s Risky:

Google Analytics is not contractually permitted to receive PII like names, emails, or SSNs. Sending the full user object – especially via object spread – can leak sensitive information that is stored and processed against DPA terms.

🟠 Example 3: Salesforce – Accidental Logging or Sharing of Entire User Objects

function sendToSalesforce(event: string, data: Record<string, any>) {

console.log(`Sending to Salesforce: ${event}`, data);

}

function syncUserToSalesforce(user: User) {

// ❌ BAD

sendToSalesforce("lead_create", { ...user });

// ✅ GOOD

sendToSalesforce("lead_create", {

firstName: user.firstName,

lastName: user.lastName,

email: user.email,

phoneNumber: user.phoneNumber,

companyName: user.companyName,

role: user.role,

industry: user.industry,

});

}Why It’s Risky:

Although Salesforce may allow many fields under the DPA (name, contact info, etc.), PII like SSNs and user IDs are typically out of scope. Spreading the full object risks violating these agreements, especially if data visibility in Salesforce is not tightly controlled.

Accidental Logging or Sharing of Tainted Variables

Variables that begin clean may become “tainted” with PII. Developers and AI assistants often fail to catch this, especially when constructing dynamic prompts for AI models like OpenAI.

🟠 Example 4: OpenAI – Tainted Variable Used in Prompt

import { OpenAI } from "openai";

const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

async function generatePersonalizedMessage(user: User) {

// ✅ Initially clean variable

let promptContext = {

audience: "Customer",

notes: "Welcome to our platform.",

};

// ❌ BAD: Variable becomes tainted with PII

if (user.firstName && user.email) {

promptContext.audience = `${user.firstName} ${user.lastName}`; // now tainted with PII

promptContext.notes = `Welcome ${user.email} to the ${user.industry} platform.`; // tainted

}

const prompt = `Generate a greeting for: ${promptContext.audience}. Message: ${promptContext.notes}`;

const response = await openai.chat.completions.create({

model: "gpt-4",

messages: [{ role: "user", content: prompt }],

});

// ✅ GOOD: Use only permitted metadata in prompt

// const prompt = `Generate a welcome message for a ${user.role} in the ${user.industry} sector.`;

}

📊 Data Processing Agreement (DPA) Breach Summary Table

| Scenario | Platform | ❌ Breach (Not Allowed by DPA) | ✅ Allowed by DPA |

| Accidental sharing of user object to Datadog | Datadog | email, ssn, firstName, lastName, phoneNumber, role, companyName, industry | hostname, ipAddress, deviceType |

| Tainted variables used in OpenAI prompt | OpenAI | email, firstName, lastName | role, industry, companySize, age, gender |

| Accidental sharing of user object to Google Analytics | Google Analytics | email, ssn, firstName, lastName, phoneNumber, role, companyName, industry | ipAddress, deviceType, browserUsed |

| Accidental sharing of user object Salesforce | Salesforce | ssn | firstName, lastName, email, phoneNumber, role, companyName, industry |

Policy Violations by Framework

When sensitive data is shared with third-party integrations beyond the scope of an established Data Processing Agreement (DPA), it constitutes a clear violation of applicable regulations, including:

- Personally Identifiable Information (PII): GDPR, CCPA, GLBA, PIPEDA, APPI, NIST 800-53, ISO/IEC 29100, and similar laws

- Protected Health Information (PHI): HIPAA

- Cardhold Data (CHD): PCI DSS

✅ Best Practices

- Avoid sending complete user objects to third-party services.

- Sanitize sensitive data only when its collection is strictly necessary. Prioritize data minimization-if the data isn’t essential, exclude it entirely. This approach is more secure than relying on sanitization alone, especially for LLM prompts and data sent to analytics or observability platforms.

- Refer to your Data Processing Agreement (DPA) and enforce permitted fields through code.

- Build utility functions that extract and return only the data fields allowed under your compliance requirements.

Current Methods of Tracking Third-Party Data Flows to Integrations

📊 Tracking & Sanitizing Data Flows to Third-Party Integrations

| Method | Layer | Pros | Cons |

| Static Code Analysis | Code Layer | – Early detection (pre-deployment) – Scales across repos – Enforces privacy by design – Works for developer and AI-generated code | – May miss runtime-generated data |

| Manual Code Reviews | Code Layer | – Human judgment – Can catch complex context-based issues | – Time-consuming – Not scalable – Prone to human error |

| API Gateway Monitoring (e.g., Kong) | API Layer | – Centralized control over API traffic – Can log, redact, or block | – Requires all traffic to pass through gateway – Misses traffic that bypasses the gateway (e.g., SDKs, internal services) |

| Network Proxy (e.g., Envoy) | Network Layer | – No need to modify app code- Can log encrypted traffic (with effort) | – Hard to scale across microservices – Lacks understanding of data context or meaning |

| Data Loss Prevention (DLP) Tools | Network / Storage | – Detects sensitive data in transit or at rest – Integrates with broader security stack | – Reactive, not preventative – Lacks visibility into app-layer data flows and third-party SDKs |

🔍 Note: While API and network-level tools provide valuable safeguards, they are fundamentally reactive. These solutions sanitize data in transit but do not prevent the collection of unnecessary data, falling short of enforcing true data minimization – a cornerstone of privacy by design.

DIY PII Detection in Code Scanning Doesn’t Scale

Hardcoded RegEx rules are brittle, difficult to maintain, and often limited to basic log detection. Most DIY efforts stall before scaling meaningfully-especially when it comes to tracking data flows through third-party SDKs.

These efforts lack:

- Context around data sensitivity

- Awareness of sanitization or transformations

- Visibility into where data ends up (sinks)

Complexity grows exponentially when trying to account for:

- Every RegEx variation for each sensitive data type

- Variations in field names and object nesting

- All SDK invocations scattered across large codebases

As codebases evolve, it becomes nearly impossible to maintain accurate coverage – making DIY approaches unsustainable for privacy and compliance at scale.

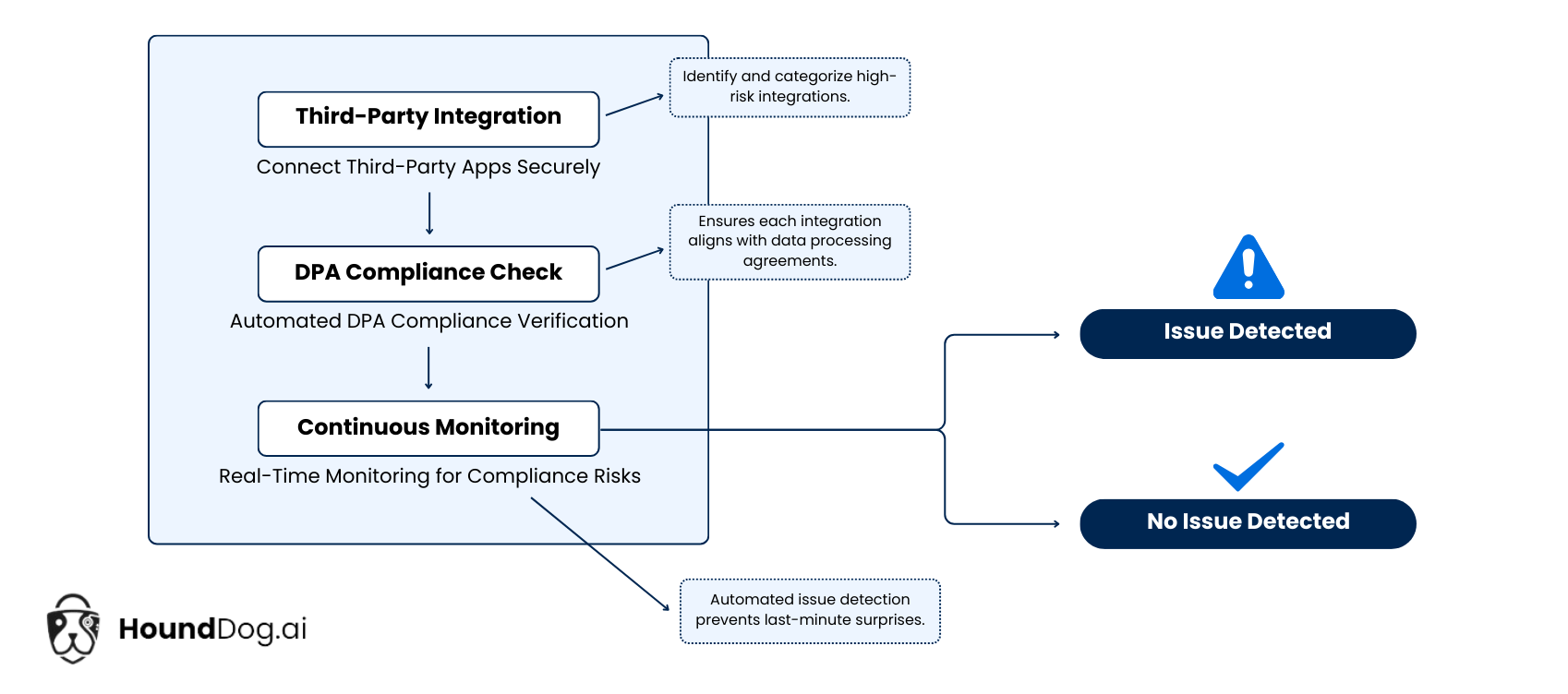

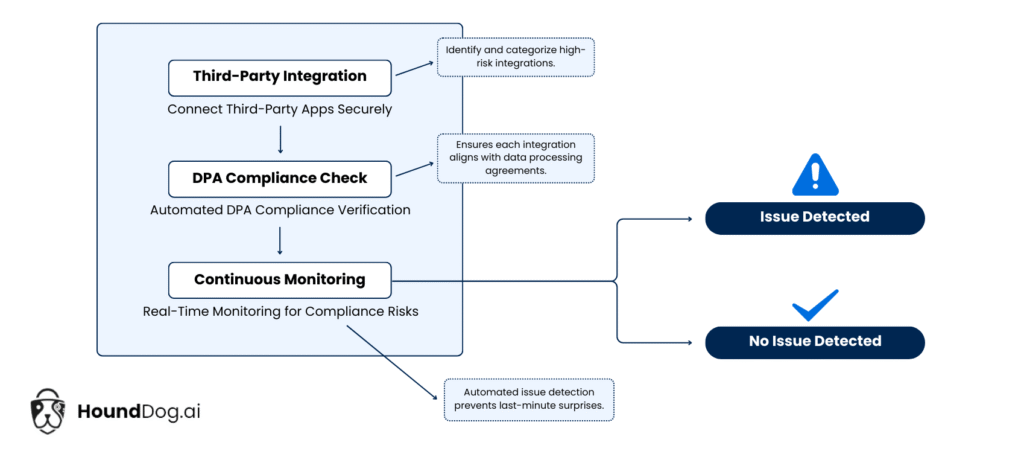

HoundDog.ai – The Privacy-by-Design Code Scanner Purpose-Built for PII Detection and Data Mapping

HoundDog.ai empowers security, privacy, and engineering teams to catch sensitive data leaks and privacy risks before code is deployed. Built from the ground up to enforce privacy by design, our static code scanner enforces data minimization and maps sensitive data flows across all storage mediums and third-party integrations – all directly within your source code.

⚡️ Blazing Fast. Built in Rust for Scale.

Our scanner is written entirely in Rust, making it extremely fast and lightweight. It can scan millions of lines of code in under a minute, with virtually no impact on developer velocity.

Perfect for:

- Large monolithic or microservices codebases

- High-frequency CI/CD pipelines

- Multi-language repositories

🔍 Unmatched Detection Accuracy Across the Full Data Lifecycle

HoundDog.ai goes far beyond regular expressions, delivering precise, context-aware detection of:

- Sensitive Data Elements: PII, PHI, PIFI, CHD, and other regulated identifiers

- Risky Data Sinks: Including hundreds of third-party tools and SDKs across observability, analytics, sales, marketing, and AI

- Sanitization Gaps: Flags data only when it is unsanitized, reducing noise and surfacing real risks

With HoundDog.ai, you gain visibility into what data is handled, how it’s transformed, and where it’s going – across your entire codebase.

🔧 Endlessly Flexible and Built for Compliance

Tailor detection logic to your unique tech stack and regulatory requirements:

- Define custom data element types based on internal policies or legal obligations

- Apply granular allowlists to enforce which data elements are permitted per data sink or third-party integration – upholding your data processing agreements and privacy policies

- Add custom sanitization functions to meet your internal security standards

Whether you’re aligning with GDPR, HIPAA, PCI DSS, or internal policies, HoundDog.ai adapts to your needs.

🧱 Enterprise-Ready. Developer-First. CI-Integrated.

HoundDog.ai fits directly into your existing engineering workflows:

- Code Repository Integration: Connect to GitHub, GitLab, or Bitbucket. Scan pull requests, block risky changes, and leave actionable code comments.

- Managed Scans: Offload scan execution to HoundDog.ai for continuous, hands-off coverage across all repositories, complete with compliance-grade reporting.

- CI/CD Support: Inject scans into your pipelines via GitHub Actions, GitLab CI, Jenkins, etcI. Supports auto-commits, approvals, and self-hosted runners.

HoundDog.ai enforces privacy early – before code ships.

🤖 AI-Powered Detection Engine (Coming Q3 2025)

Our upcoming AI-powered engine takes detection to the next level by integrating with any LLM running in your environment – whether open-source, API-based, or self-hosted.

This enhancement will:

- Improve detection of data elements, risky data sinks, and sanitization gaps – with minimal manual tuning

This intelligent, LLM-integrated approach helps teams scale detection effortlessly and stay ahead of evolving privacy and compliance risks.

🔐 Privacy-by-Design for AI Applications

AI applications introduce a unique set of risks – and HoundDog.ai is purpose-built to address them. Our scanner detects sensitive data leaks in AI-specific mediums, including:

- Prompt logs

- Embedding stores

- Temporary files

It also flags:

- Unsanitized inputs passed into LLMs

- Unfiltered outputs returned by AI models

This ensures that AI-generated content complies with your privacy standards and regulatory requirements – from the very first line of code.

Conclusion

Third-party integrations are vital, but they also introduce serious privacy risks. To stay compliant and protect user data, organizations must adopt a privacy-by-design mindset.

With HoundDog.ai, privacy is no longer an afterthought – it’s a continuous, integrated part of the development lifecycle.

Shift privacy left. Prevent breaches. Comply with confidence.