As Featured in Product Hunt’s Top 10

Shift-Left: Data's Best Defence

A lightweight, ultra-fast static code scanner designed to implement a proactive 'shift-left' strategy for PII leak prevention and privacy compliance.

The Problem

Delayed Detection of PII Leaks

92% of all data compromised in 2023 involved customer and employee PII record types.

Remediating PII leaks can be very expensive, requiring code updates, access log reviews, and potentially customer notifications.

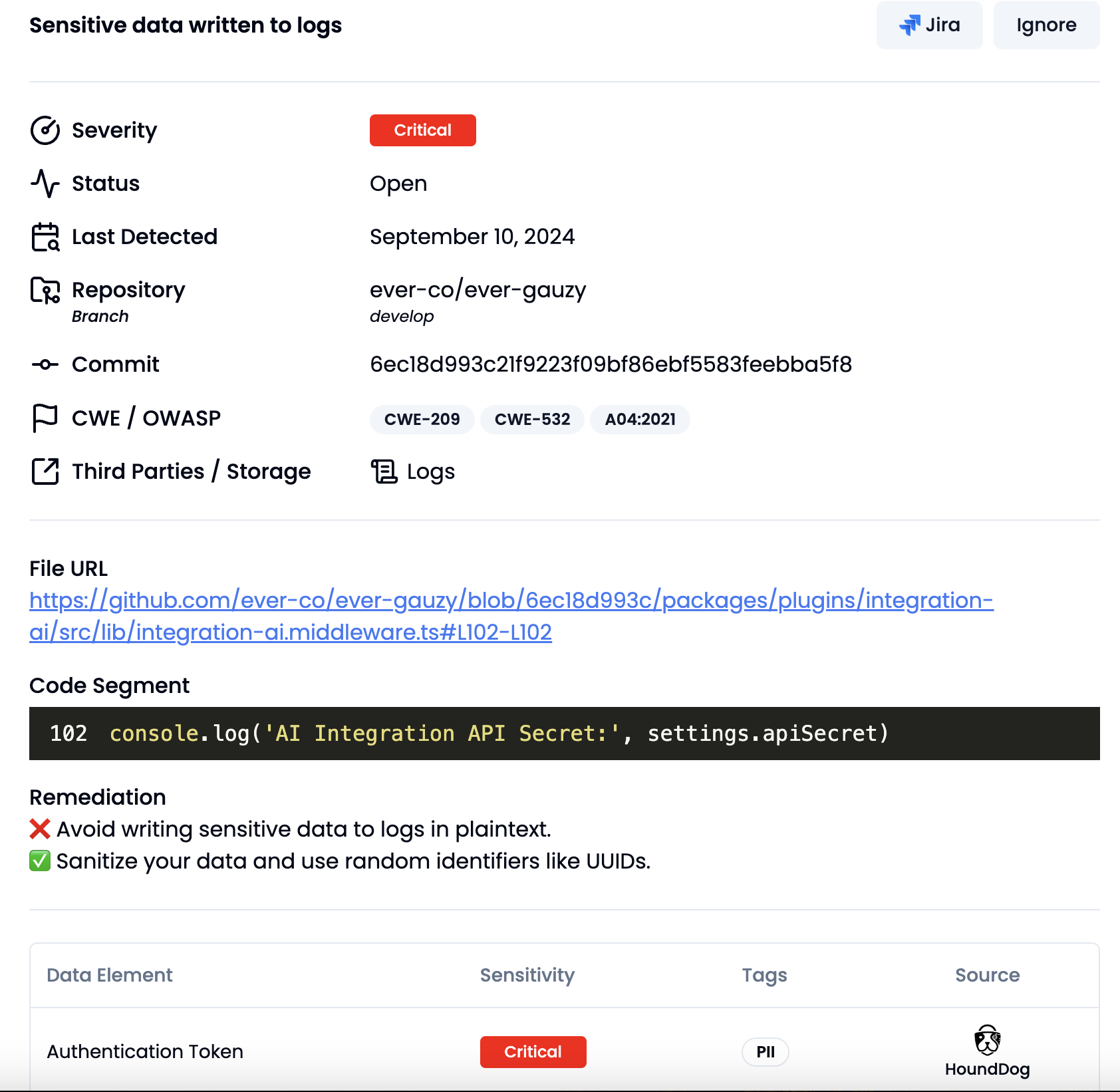

SAST scanners overlook vulnerabilities related to PII leaks. DLP platforms, meanwhile, react only after data reaches production and do not address how leaked PII spreads to other systems, for example when logs containing PII are ingested by monitoring or SIEM platforms.

Reactive and Error-Prone Processes for Privacy Compliance

Product development outpaces privacy teams, so data maps quickly fall out of date and need constant updating. Most privacy teams still rely on surveys and spreadsheets to collect this information, which increases the risk of inaccurate records of processing activities for GDPR compliance.

Tracking and managing data exchanges with third-party vendors to ensure adherence to data processing agreements is extremely challenging, increasing the risk of compliance violations for organizations.

The Solution

Implementing Data Security and Privacy Controls at the Code Level

HoundDog.ai for Proactive Sensitive Data Protection


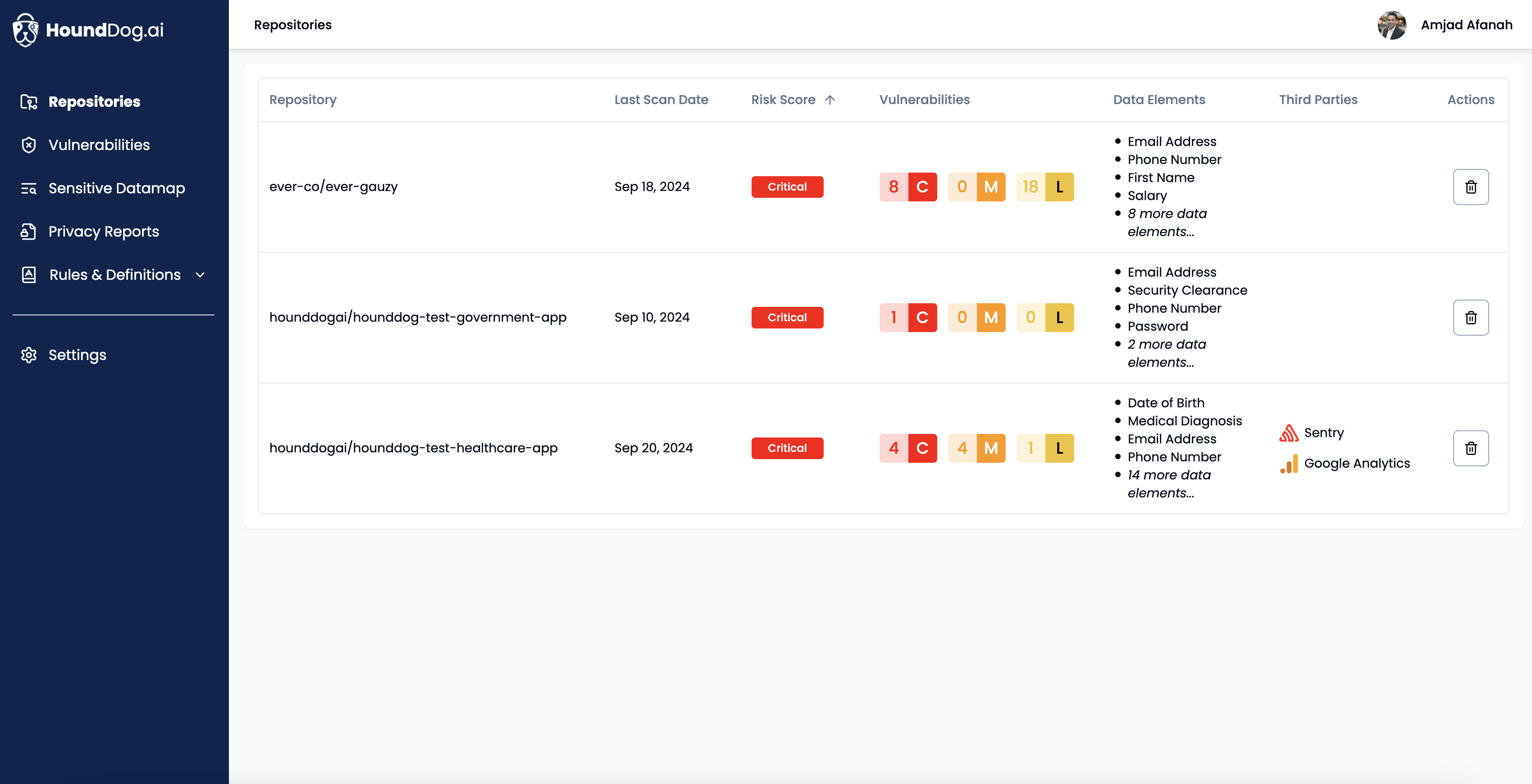

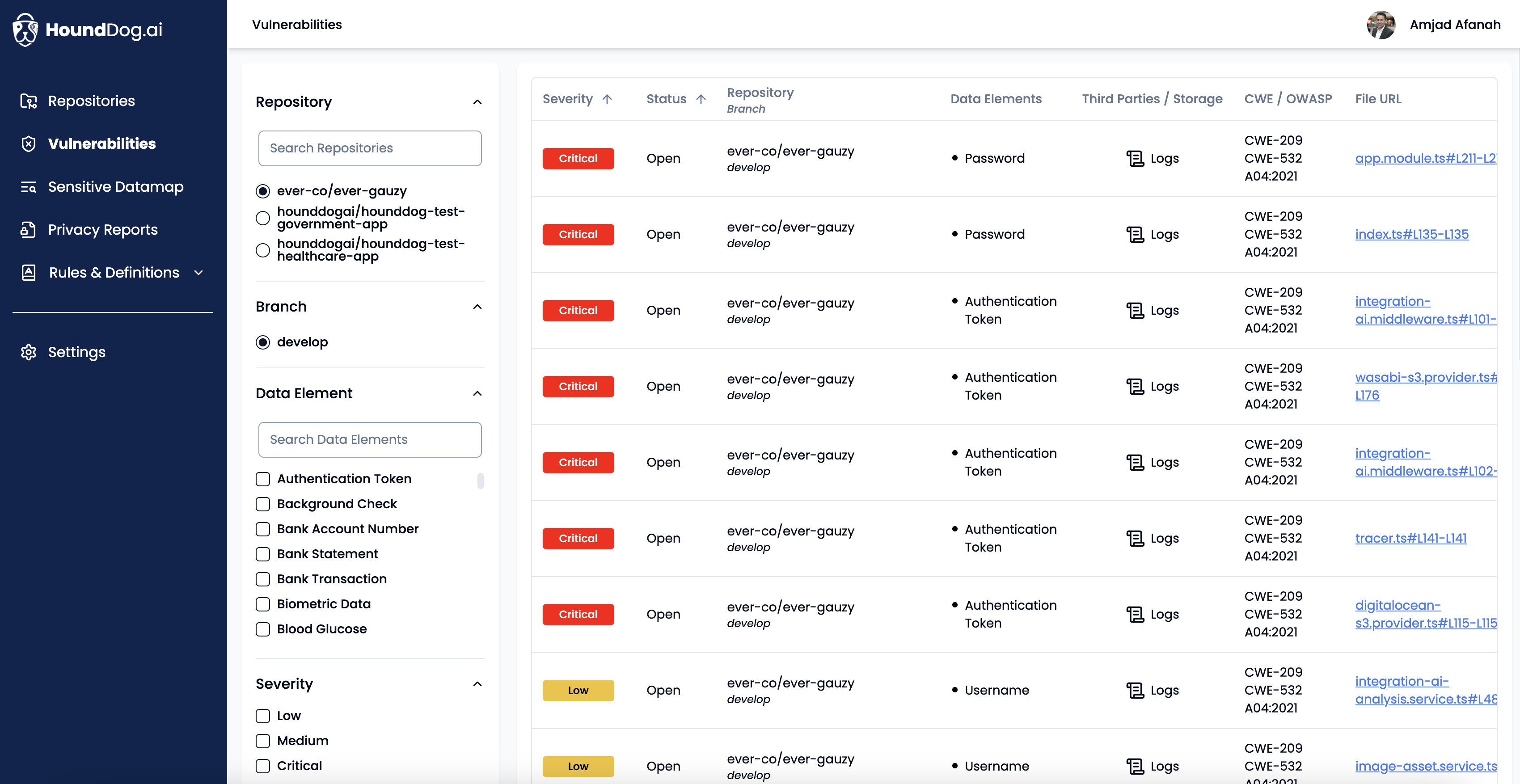

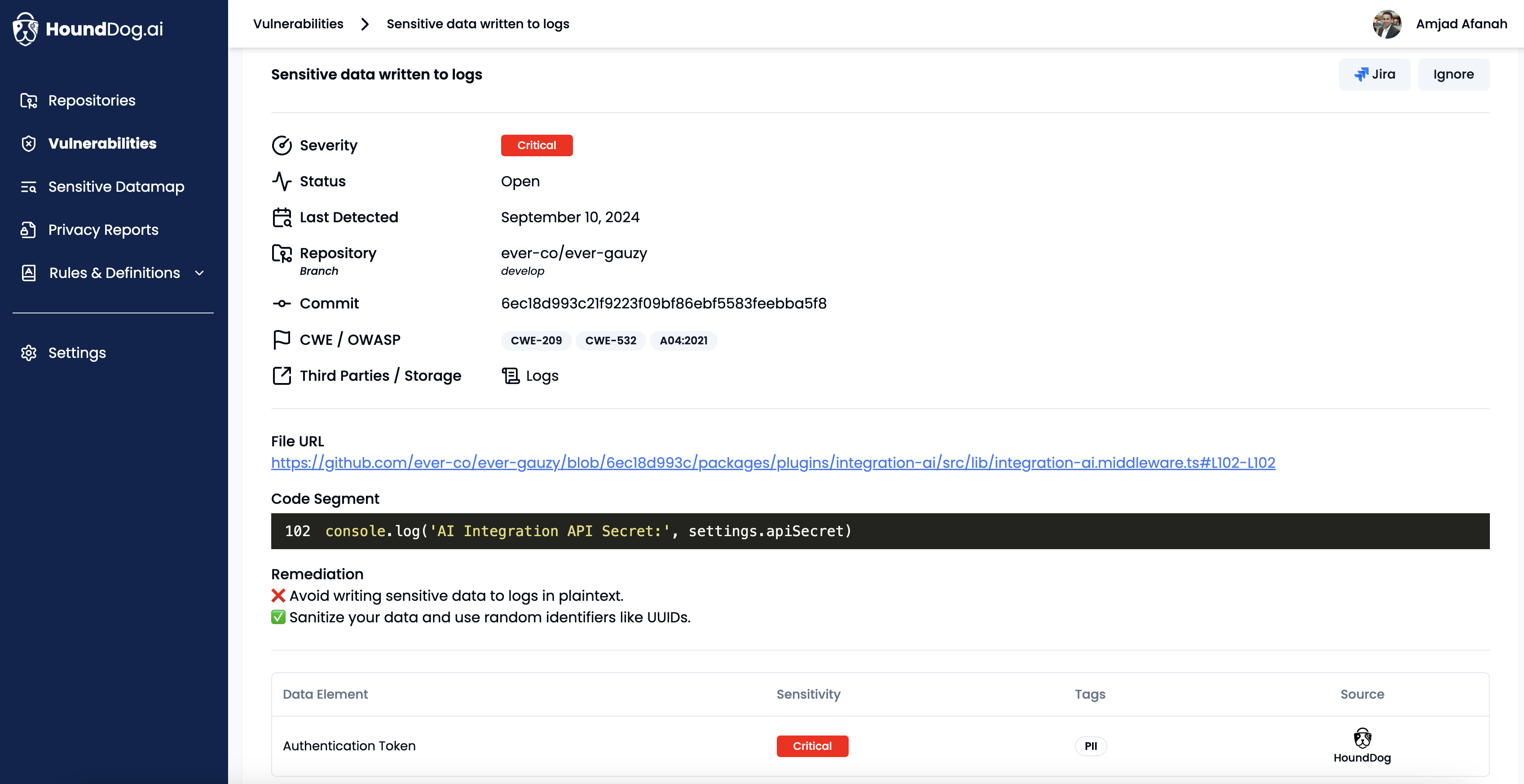

Use HoundDog.ai’s AI-powered code scanner to continuously detect vulnerabilities, currently overlooked by SAST scanners, in which sensitive data (e.g., PII, PIFI, and PHI) is exposed in plaintext through channels such as logs, files, tokens, cookies, or third-party systems (CWE-201, CWE-209, CWE-312, CWE-313, CWE-315, CWE-532, CWE-539).

Get essential context and remediation strategies, such as omitting sensitive data, applying masking or obfuscation, or using UUIDs instead of PII.

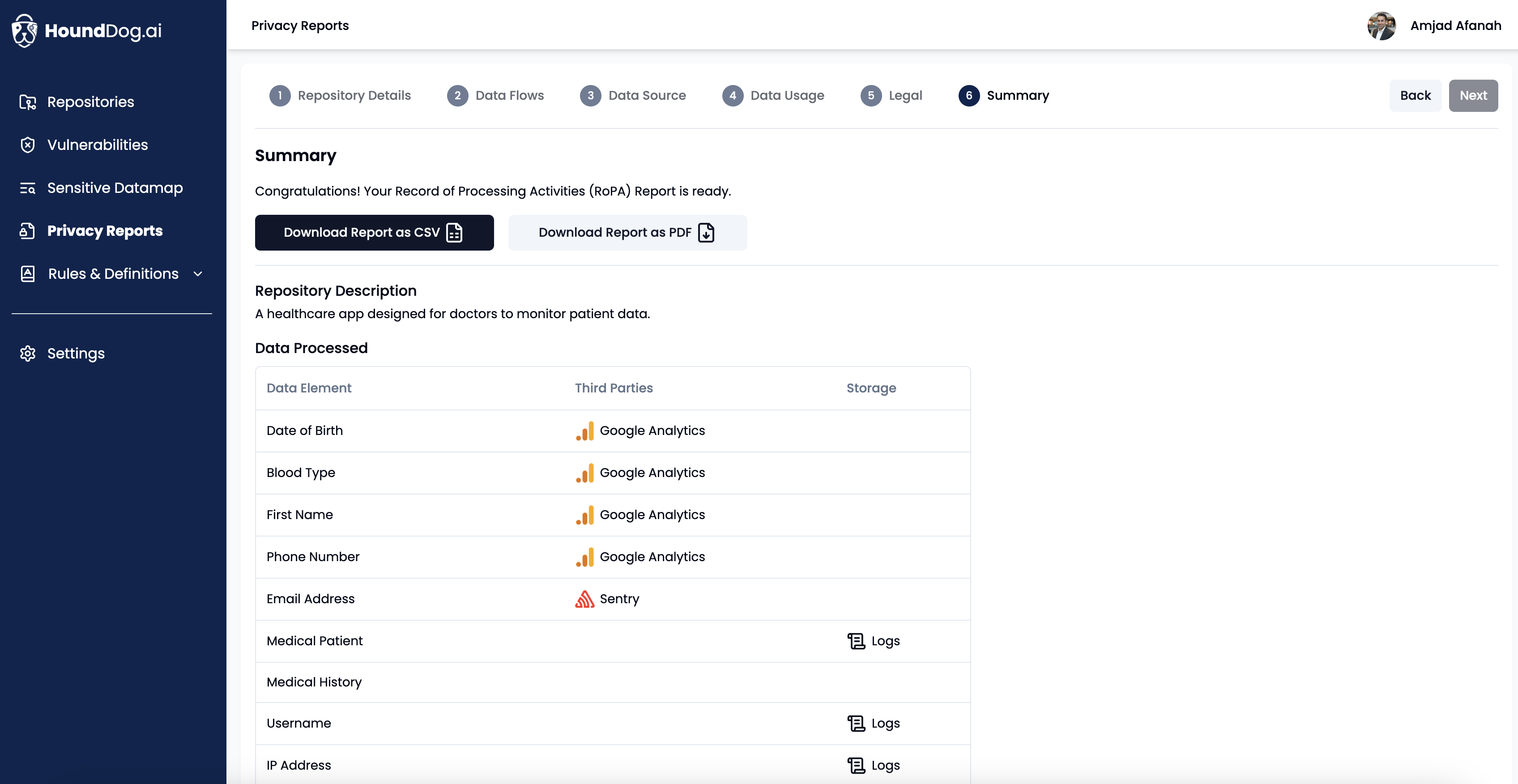
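To make the remediation strategies above concrete, here is a minimal Python sketch of all three applied to a single logging call: omitting a field, masking a value, and logging a UUID in place of an identifier. The function names (`mask_email`, `pseudonymize`, `register_user`) and the in-memory mapping are hypothetical illustrations chosen for this example; they are not HoundDog.ai output or a HoundDog.ai API.

```python
import logging
import uuid

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("signup")

# Hypothetical email -> opaque ID mapping. Assumption: a real system would keep
# this in access-controlled storage, not in process memory.
_pseudonyms: dict[str, str] = {}


def mask_email(email: str) -> str:
    """Mask an email address, keeping only the first character of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        # Not a recognizable email: mask the whole value.
        return "***"
    return f"{local[0]}***@{domain}"


def pseudonymize(email: str) -> str:
    """Return a stable random UUID for this email instead of the email itself."""
    if email not in _pseudonyms:
        _pseudonyms[email] = str(uuid.uuid4())
    return _pseudonyms[email]


def register_user(email: str, ssn: str) -> None:
    # Leaky version, the kind of statement CWE-532 describes:
    #   log.info("New user %s with SSN %s", email, ssn)

    # Remediation 1: omit the SSN from the log line entirely.
    # Remediation 2: mask the email so the log stays readable but not identifying.
    log.info("New user %s registered", mask_email(email))
    # Remediation 3: log an opaque UUID that can be correlated internally.
    log.info("User id %s completed signup", pseudonymize(email))


if __name__ == "__main__":
    register_user("jane.doe@example.com", "123-45-6789")
```

Masking keeps logs human-readable for debugging, while the UUID lets engineers correlate events for one user without the log ever containing the email address.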

HoundDog.ai for Privacy Compliance Automation


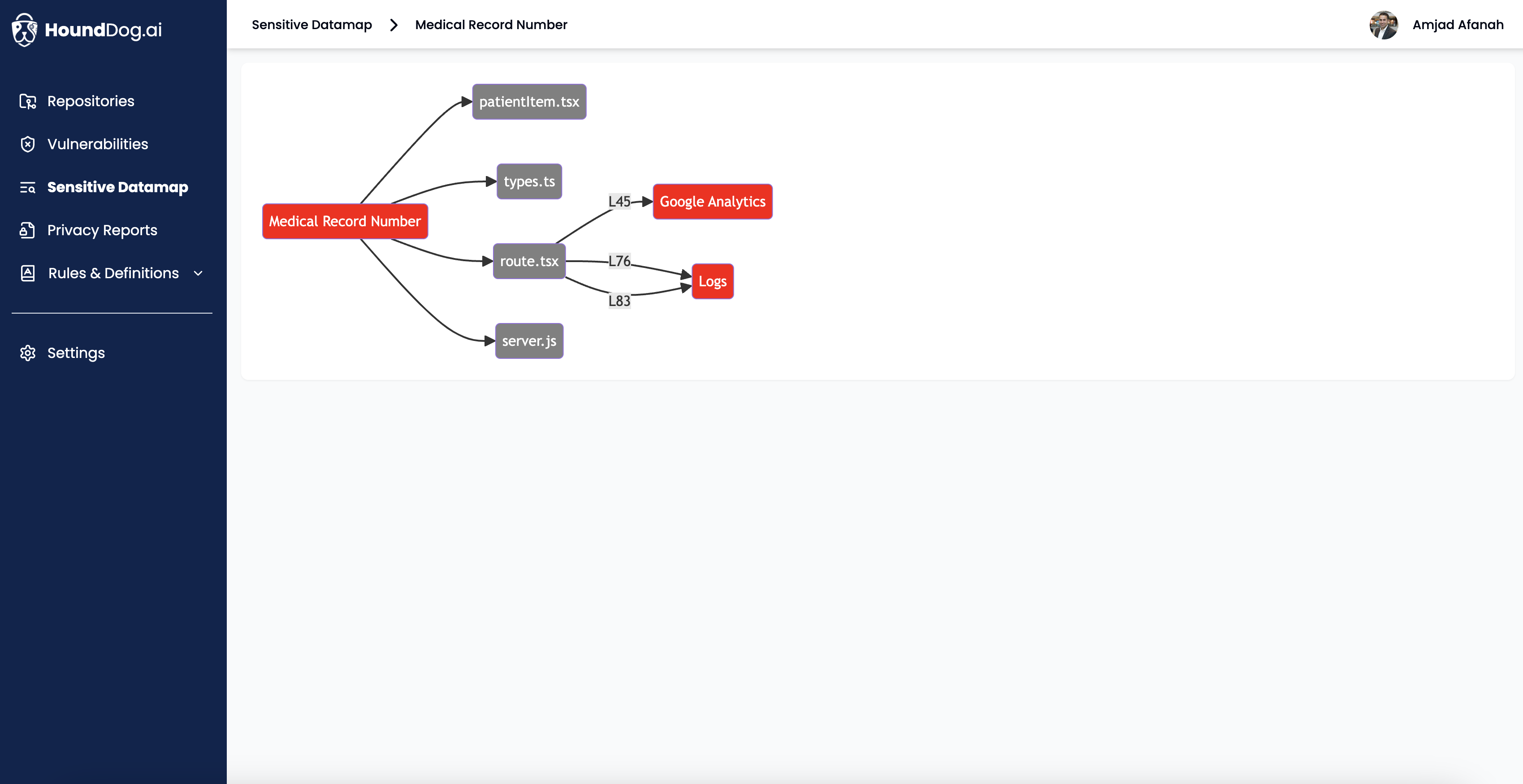

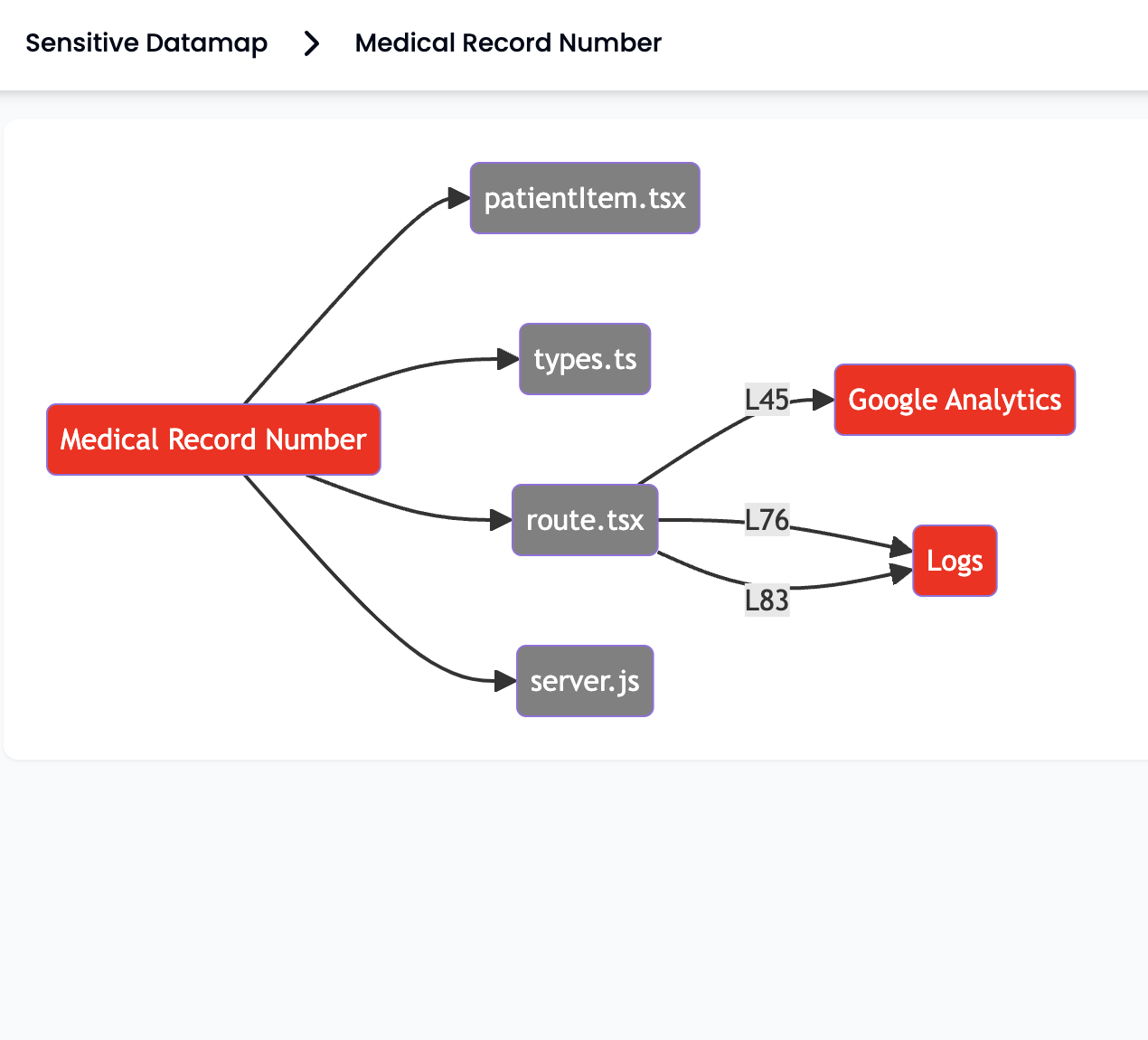

Use HoundDog.ai's AI-powered code scanner to continuously track and visualize the flow of sensitive data (e.g., PII, PIFI, and PHI). Generate Records of Processing Activities (RoPA) with a few clicks and keep pace with PII changes at the speed of development.


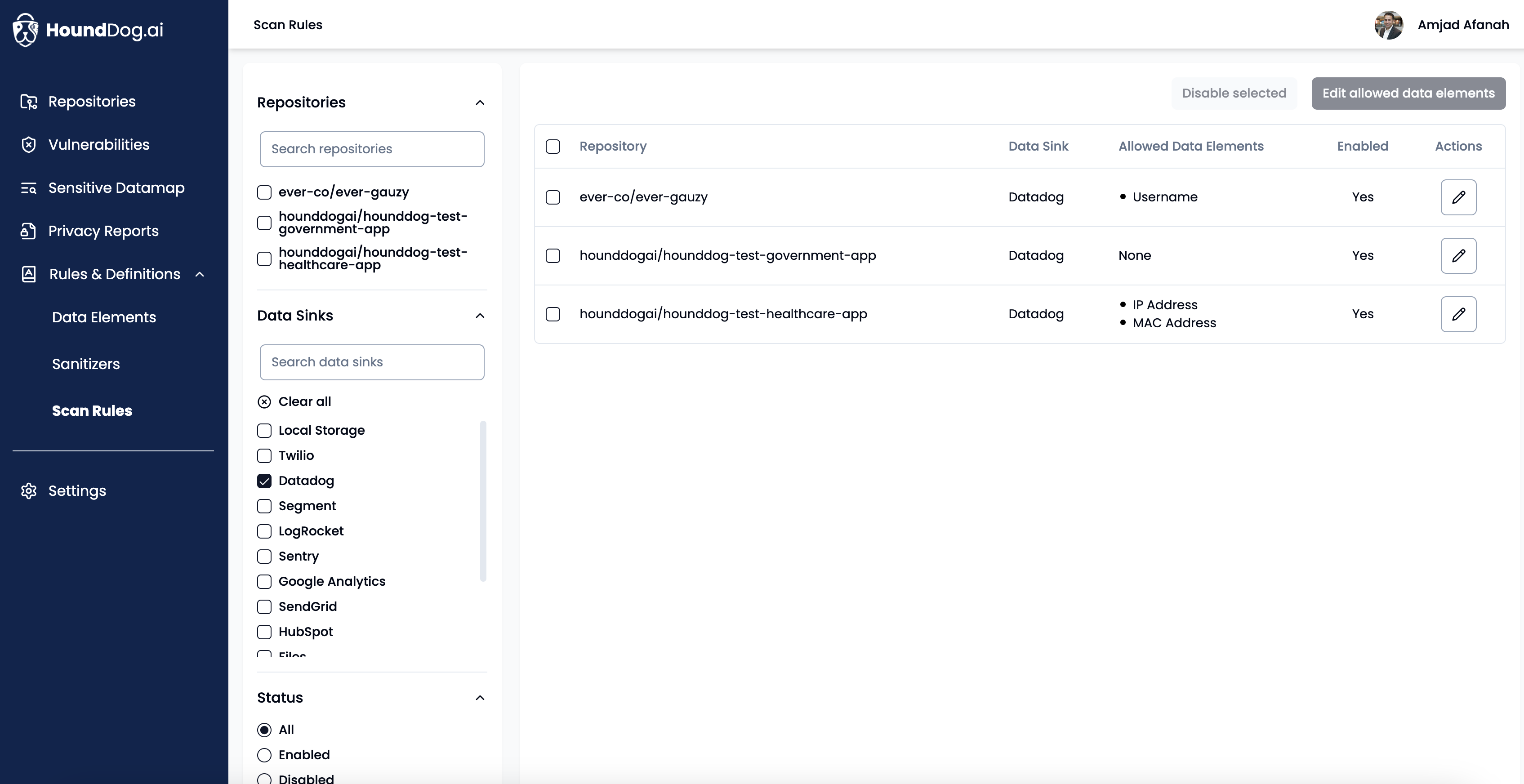

Receive proactive alerts when product changes introduce new PII without proper review, or when third-party integrations violate agreed data processing agreements. Catching these issues during development avoids the more expensive repercussions of discovering them later in production.

Return on Investment

ROI for Proactive Sensitive Data Protection

ROI for Automated Privacy Compliance

Enhance Your AppSec Program with Sensitive Data Protection and Adopt a Shift-Left Approach to Privacy Compliance

Unparalleled Coverage and Accuracy

Frictionlessly Fast

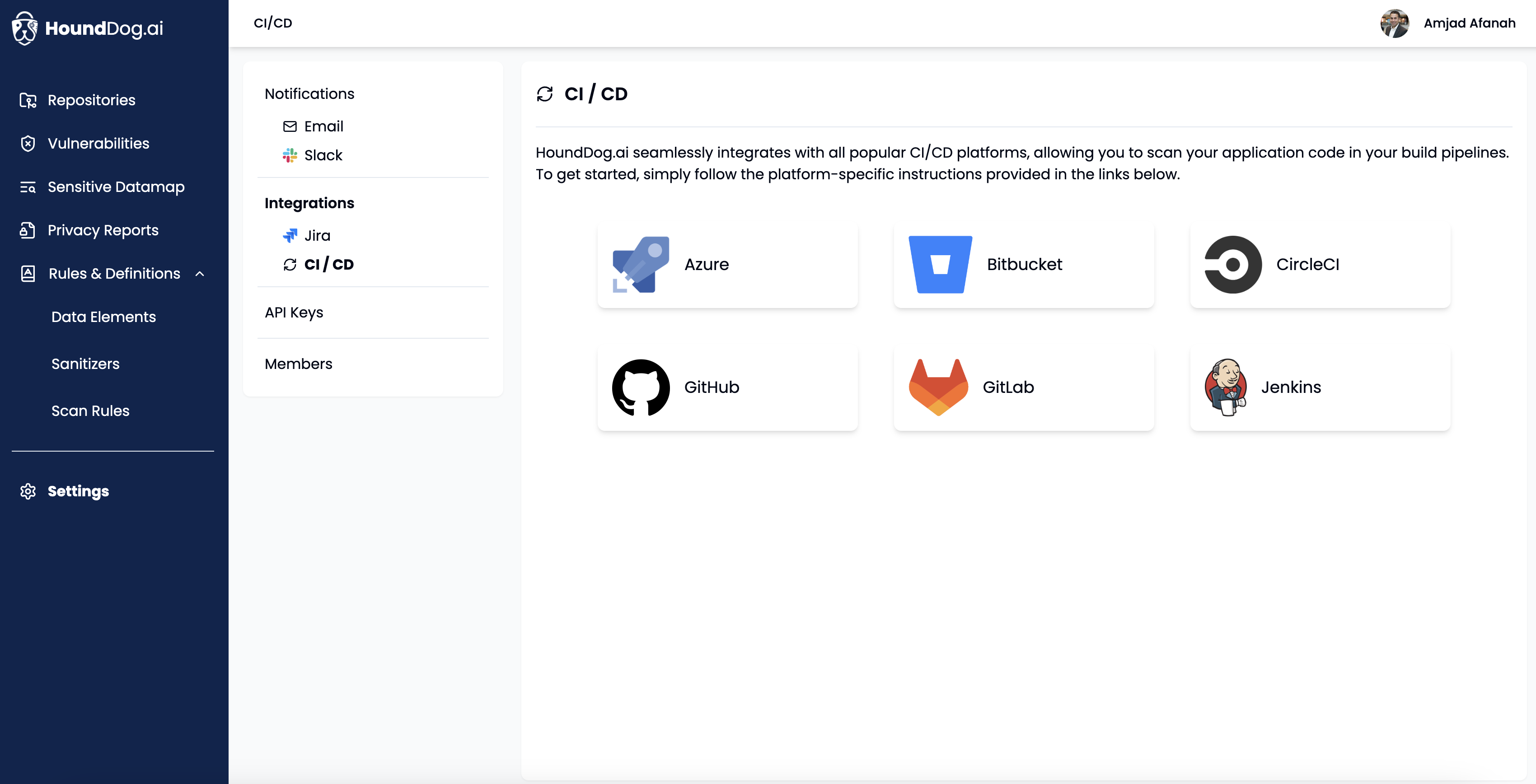

Plugs Seamlessly into Developer Workflows

Enterprise Ready

Sensitive Data Protection at the Speed of Development

Juvare

Stop PII Data Leaks at the Source and Automate Data Mapping for Compliance

Through its shift-left approach, HoundDog.ai helps organizations integrate data security and privacy controls from the start. Start for free or book a live demo to better understand the product’s capabilities and pricing.